Hi,

I wonder if it is possible to add the legacy outputs to the LBT FaceResult component?

I mean the windowTotalBeamEnergy/BeamEnergy/DiffEnergy/Transmissivity.

Thanks,

-A.

Hey @AbrahamYezioro ,

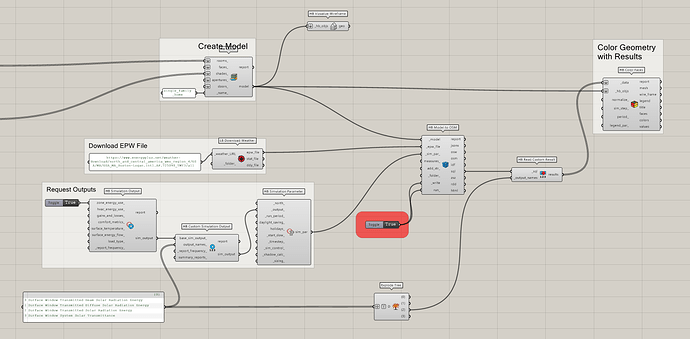

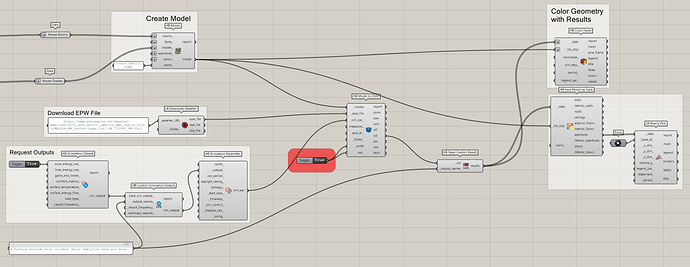

The main reason I excluded these outputs is that I wanted to keep the components to a manageable size, and these outputs aren’t going to be used in any of the major post-processing workflows that use energy model results (e.g. energy balances, comfort maps, etc.). In light of this, would you be OK just using the workflow to import custom E+ outputs like so?

Window_Transmittance_Outputs.gh (102.0 KB)

Hi @chris,

This is good enough, thanks.

Related and not related … The reason I need those outputs is the window shading control (to be implemented in the future, I hope), which uses the radiation on the window as the condition to close/open the shade/blinds. So, keeping this in mind, I have 2 questions:

- Do you have an estimate when this shading control will be implemented as in the Legacy EPWindowShades component?

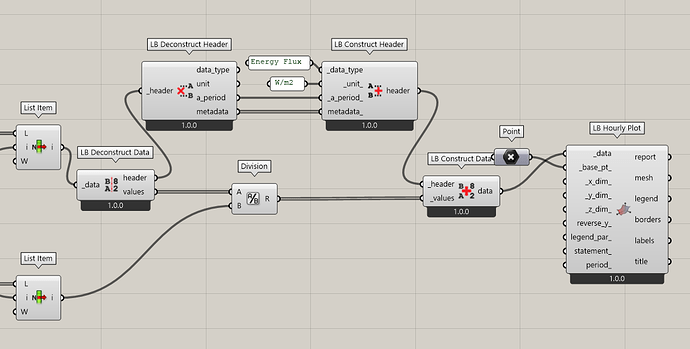

- I want to get the radiation in W/m2 (as the condition for the shading control requires). I did the calculation by decomposing the results collection. The question is: when I re-create the collection with the new results, I need to define the header. Do you have a quick way of just changing the units of the original header (kWh to W/m2)?

Attached is what I did, up to the step of creating the data collection.

Thanks again,

-A.

Window_Transmittance_Outputs_AY.gh (151.9 KB)

I should clarify that the main workflow where the solar outputs were used in Legacy was the Energy Shade Benefit like you see in this example and we’ll definitely have support for this in LBT honeybee eventually. However, I think we’ll try to do it using a recipe (just like the Radiance recipes) and all of the output-requesting will be incorporated into the recipe execution.

In any case, to answer your two questions:

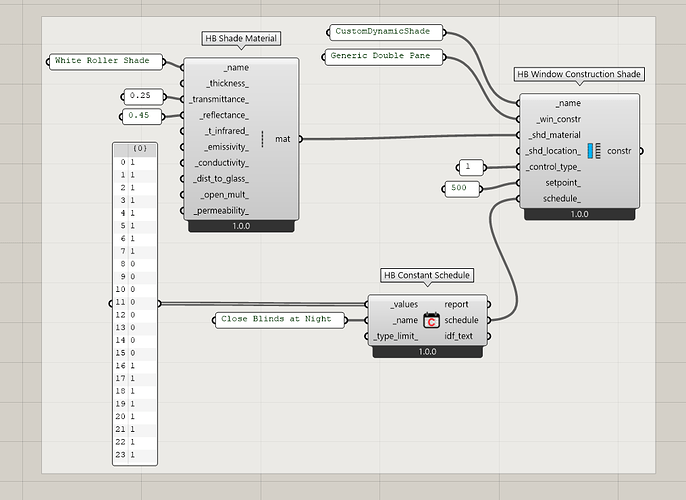

- There’s already support for Dynamic Shades. It’s just done through WindowConstructionShade now instead of a monolithic component that crams the louver-generation with detailed geometry into the same component as the one that does dynamic blinds. Here’s how you can assign the schedule using WindowConstructionShade:

- If you want values in W/m2, then there are two things I would recommend. First, just request a different output from the simulation that’s in W. All of the outputs that we are currently requesting have a “Rate” version that’s in W. Second, you can post-process the data collections to be normalized by area with the following:

Window_Transmittance_Outputs_AY_CWM.gh (125.7 KB)

FYI, there are EnergyPlus outputs that have incident window radiation normalized per area if you’re willing to use that as your starting point instead of the transmitted radiation. I also think I can add a surface normalizing component that works like the floor normalizing component if this is a common use case.
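For anyone who wants to see the arithmetic behind the kWh to W/m2 conversion discussed above, here is a minimal plain-Python sketch. It does not use the Ladybug Tools API: the function name `energy_to_flux`, the sample values, and the window area are all made up for illustration, and it assumes each value is the energy delivered over one timestep of known length.

```python
def energy_to_flux(kwh_values, area_m2, hours_per_step=1.0):
    """Convert per-timestep energy (kWh) to average flux (W/m2).

    Hypothetical helper for illustration only, not part of ladybug.
    Assumes every value covers one timestep of `hours_per_step` hours.
    """
    if area_m2 <= 0:
        raise ValueError("area_m2 must be positive")
    if hours_per_step <= 0:
        raise ValueError("hours_per_step must be positive")
    # kWh over one timestep -> average W during that timestep
    watts_per_kwh = 1000.0 / hours_per_step
    return [v * watts_per_kwh / area_m2 for v in kwh_values]


# Made-up hourly transmitted solar energy for one window (kWh)
hourly_kwh = [0.0, 0.5, 1.2, 0.3]
window_area = 4.0  # m2, hypothetical

flux = energy_to_flux(hourly_kwh, window_area)
print(flux)
```

With hourly data the timestep is 1 hour, so the conversion reduces to multiplying by 1000 and dividing by the area. For sub-hourly results, `hours_per_step` would need to match the simulation timestep.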

Thanks a lot @chris,

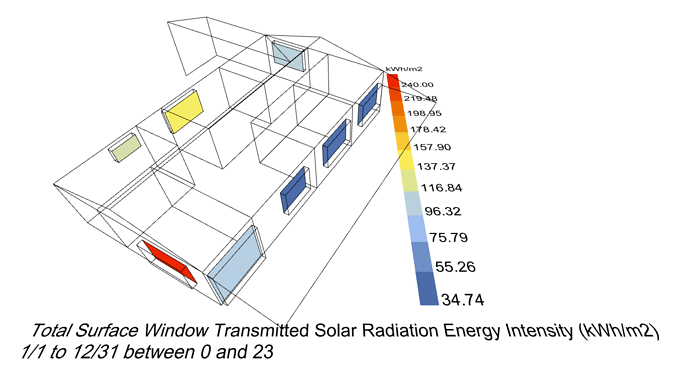

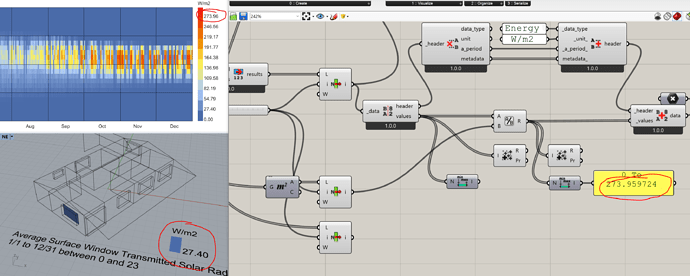

This approach made me notice that there is an error in the calculation of the legend values for the ColorFaces component when the units come to W/m2, as you can see here:

Could that be?

I was looking and looking, and the closest output I’ve found is:

Surface Outside Face Incident Solar Radiation Rate per Area

From there I can separate the windows for visualizing them with the ColorFaces. I can’t manage to separate the numerical results for just the windows to visualize them with the HourlyPlot. Do you have any ideas how to do that (I know you do …)?

Thanks,

-A.

So the first issue is not a bug but it’s a pretty remarkable coincidence that the two values for this case differ almost perfectly by a factor of 10. The values that you see in the “Color Faces” visualization are the AVERAGE W/m2 that the face experiences over all hours of the year. This is different from the PEAK W/m2 that the face experiences on its most sun-exposed hour of the year. If you were to scroll through the “Color Faces” visualization on an hour-by-hour basis (instead of viewing the default average over the year), you will see that you get some hours where the W/m2 gets above 200.
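To illustrate the difference between the average and the peak, here is a small plain-Python sketch. The hourly W/m2 values are made up for a single day (they are not results from the attached file), and the function name `average_and_peak` is just for illustration.

```python
def average_and_peak(values):
    """Return (average, peak) of a list of hourly W/m2 values."""
    if not values:
        raise ValueError("values must not be empty")
    return sum(values) / len(values), max(values)


# Made-up hourly W/m2 on a face for one day: zero at night, a midday peak
night_before = [0.0] * 6
daytime = [20.0, 60.0, 120.0, 180.0, 220.0, 240.0,
           220.0, 180.0, 120.0, 60.0, 20.0]
night_after = [0.0] * 7
day = night_before + daytime + night_after

avg, peak = average_and_peak(day)
print("average W/m2:", avg)
print("peak W/m2:", peak)
```

Because the night hours count toward the average, it ends up well below the peak, which is why a legend showing the average can look far too low next to the values of an individual sunny hour.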

I definitely have some ideas for separating the results, and this is a wish that is easily granted. I opened an issue and I’ll try to get to it later today:

I think I can also work an option into the component to normalize the data by the area of the face/aperture as part of the matching and separation process.

The component is done but I made some changes to the core library to keep the code inside the component nice and elegant. Once all of the changes trickle through our integration tests tomorrow, I will add a sample with the new component.

Thanks @AbrahamYezioro. The core library changes kept me up later than usual.

Everything has now made it through the integration tests so, if you run the Versioner component and restart Rhino, you will be able to open this sample file that has a component to separate the results by face/object type.

SeparateAndNormalizeResults.gh (108.1 KB)

You’ll see it also includes the area normalization option, which you can use with some of the other surface-level outputs of EnergyPlus that don’t have built-in normalization.

Hi @chris,

Tested the normalization option and it is giving this error:

Runtime error (UnboundNameException): global name ‘new_meta’ is not defined

Traceback:

line 243, in normalize_by_area, “C:\Users\ayezi\ladybug_tools\python\Lib\site-packages\ladybug\_datacollectionbase.py”

line 117, in script

It happens when you ask for an output that is not already calculated for floor area. Small bug, I guess.

-A.

That was a bug in the first commit I made, but I corrected it after a couple of hours. The second fix must have taken longer to get through our tests for some reason. Anyway, rerun the Versioner and you should be able to run it without issues.

Hello Mr @chris Mackey,

I have found that this post could solve most of the problems I have had over the last few weeks. However, I have a question about the discussion. After downloading the attached file “SeparateAndNormalizeResults.gh”, I attempted to use the Versioner component, but I am not sure which version I should use for the normalization option to work.

Could I get some help, please?

Thank you

Hi @Syo

Just use the latest version and use the “LB Sync Grasshopper File” to update the definition for the version you have. It should work.