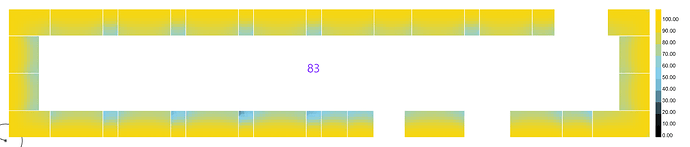

It turns out you were right, Nathaniel. HB1.1 is dividing the simulation per grid. I reran the simulation as a whole, and here is the final result, with a total run time of 2 min!

With that out of the way, the question now is: how can we group all the grids into a single simulation, and then ungroup the results at the end so the data for each room can be processed individually?