Hello everyone,

I’m trying to evaluate solar radiation on the facade of a building using Honeybee Legacy. I am using the grid-based analysis so that I get cumulative radiation values for each point of the grid over a year.

I tested several variations of the Radiance parameter settings, following the recommended options at http://radsite.lbl.gov/radiance/refer/Notes/rpict_options.html.

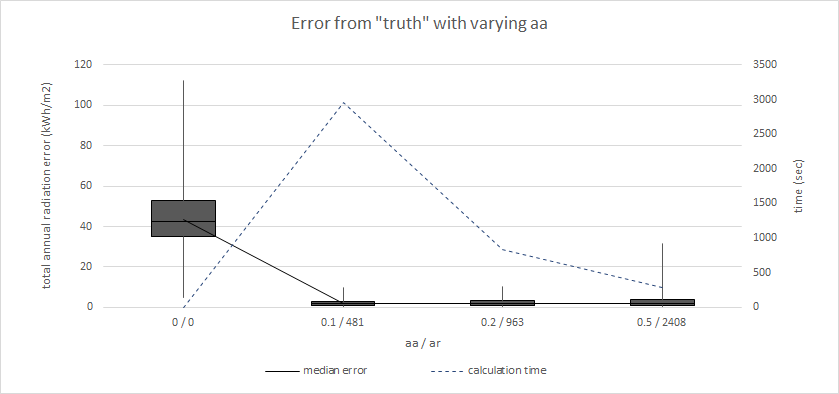

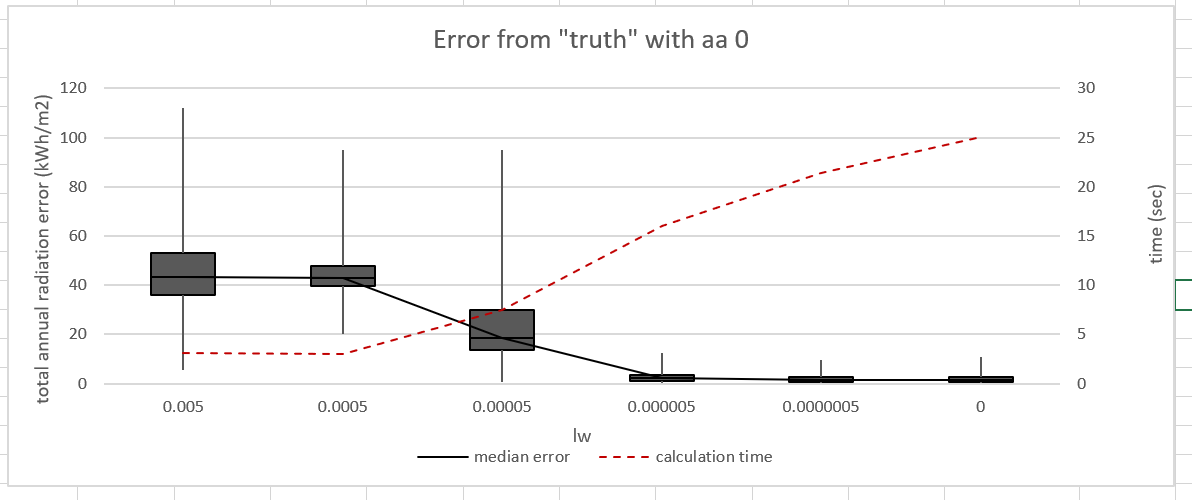

In general, as I move through the Min, Fast, and Accur settings, the accuracy increases along with the simulation time, as expected. Something interesting happens when I apply the Max settings, though: the simulation time drops drastically, while the accuracy stays similar to what I get with the “Accurate” settings.

For example, in my case, simulating 560 points takes approximately 11 seconds with the “Accurate” settings, and with the “Max” settings the time drops to 2 seconds. When I then change -aa and -ar to anything other than 0, the time increases to as much as 18 minutes, with the same results that I was getting from the “Max” simulation.

Can someone explain why this is happening?

Thank you very much, Ondrej