Hello everyone,

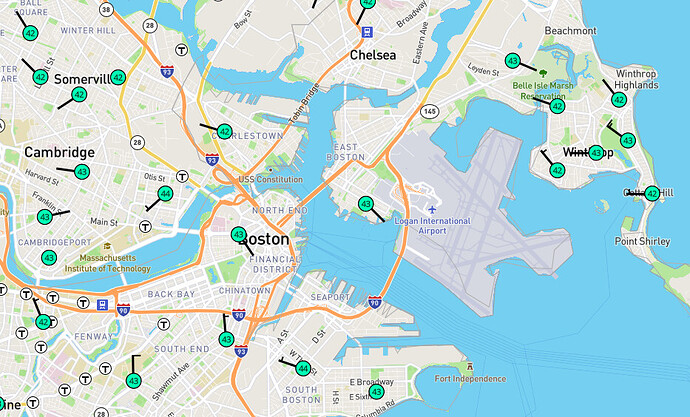

For the last year or so, I’ve been working on making historical weather reanalysis data (e.g. ERA5 Reanalysis) more accessible. Despite its comprehensiveness and quality, the data isn’t widely used because of the effort required to process it.

I’ve recently launched a service that makes this data easier to access, and I’m looking for beta testers. You can sign up at https://oikolab.com: create an account and add a ‘Starter’ subscription to get access to historical reanalysis data for any location from 1980 onwards.

This enables one to quickly look at climate trends and develop tools such as:

- Climate Explorer: how has the climate changed where you live over the last 40 years?
- EPW generator with the Ladybug Python library: Chris created this Python script to demonstrate how you can generate an EPW file for any location simply by specifying the location and the year and then pulling the data. I modified the script slightly for readability.
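One detail any AMY-to-EPW converter has to get right is the hourly timeline. As a minimal, standard-library-only sketch (this is not Chris’s script and not the Ladybug API; `epw_hour_index` is a hypothetical helper), the following lists the (year, month, day, hour) labels for every row of a one-year EPW file. It uses the EPW convention of numbering hours 1 to 24, where hour 1 covers 00:00 to 01:00, and it assumes the data has already been shifted to local standard time (reanalysis data such as ERA5 is provided in UTC). Because an AMY file covers a real calendar year, a leap year yields 8784 rows rather than 8760.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def epw_hour_index(year: int) -> list[tuple[int, int, int, int]]:
    """Return (year, month, day, hour) labels for every hour of `year`.

    Hypothetical helper for illustration. Hours follow the EPW 1-24
    convention: hour 1 is the interval 00:00-01:00 local standard time.
    """
    t = datetime(year, 1, 1)
    end = datetime(year + 1, 1, 1)
    rows = []
    while t < end:
        # The interval [t, t + 1h) is labelled by the hour in which it ends,
        # but keeps the date on which it starts (so 23:00-24:00 is hour 24).
        rows.append((t.year, t.month, t.day, t.hour + 1))
        t += timedelta(hours=1)
    return rows


if __name__ == "__main__":
    rows = epw_hour_index(2020)  # 2020 is a leap year
    print(len(rows))   # 8784
    print(rows[0])     # (2020, 1, 1, 1)
    print(rows[-1])    # (2020, 12, 31, 24)
```

Generating the rows from the calendar, rather than assuming 8760 hours, keeps February 29 in leap years; whether to keep or drop that day is a choice your simulation tool may constrain.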

Please note that once fully launched, this will be a paid service, although it will be priced substantially lower than existing services. I think it’s particularly concerning that, as climate change progresses, it’s becoming difficult to even define a ‘typical meteorological year’ from past records. The idea is therefore to let energy modellers easily generate 30 to 40 years’ worth of AMY (actual meteorological year) files for any location as required. For instance, the ‘Starter’ subscription, which you can currently sign up for free, would allow you to generate up to 100 AMY files per month.
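A long-term trend is one reason a single ‘typical’ year is hard to pin down. As a minimal sketch, assuming you already have annual mean temperatures for a location (for example, averaged from 30 to 40 years of hourly reanalysis data), an ordinary least-squares slope gives the average change per year. This is a generic technique chosen for illustration, not how Climate Explorer or the oikolab service computes trends; the function name `linear_trend` is hypothetical, and the input in the usage example is synthetic.

```python
from __future__ import annotations


def linear_trend(years: list[int], values: list[float]) -> float:
    """Ordinary least-squares slope of `values` against `years`.

    Hypothetical helper for illustration; returns units per year
    (e.g. degC/year if `values` are annual mean temperatures).
    """
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two (year, value) pairs of equal length")
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    if sxx == 0:
        raise ValueError("years must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return sxy / sxx


if __name__ == "__main__":
    # Synthetic input only: a straight line rising 0.03 degC per year.
    years = list(range(1980, 2020))
    temps = [15.0 + 0.03 * (y - 1980) for y in years]
    per_decade = linear_trend(years, temps) * 10
    print(round(per_decade, 2))  # 0.3
```

A straight-line fit is the simplest summary of drift; real annual series are noisy, so in practice you would also want to look at the spread around the fitted line before drawing conclusions.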

I’m particularly interested in feedback regarding use cases (how often do you use historical weather data or need to generate EPW files?). If you have any questions, comments or suggestions, please feel free to reach out to me at joseph.yang@oikolab.com.

Thanks!

Joseph